Neural network training made easy with smart hardware

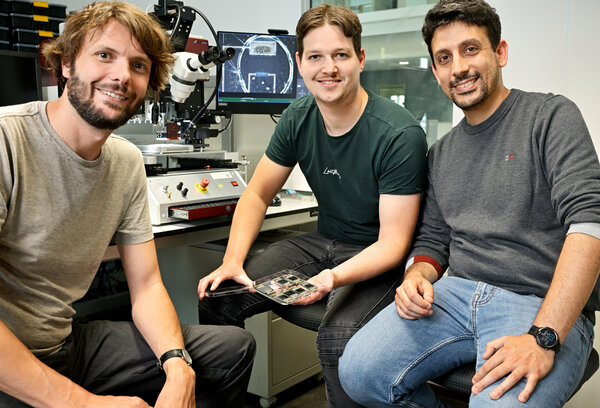

Led by Yoeri van de Burgt and Marco Fattori, ������ý researchers have solved a major problem related to neuromorphic chips. The new research is published in Science Advances.

![[Translate to English:] [Translate to English:]](https://assets.w3.tue.nl/w/fileadmin/_processed_/2/4/csm_Banner%20BvOF%202024_0709_AZA%20organic%20neuromorphic%20chip%20based%20on%20ECRAM%20devices_122cdc0125.jpg)

Large-scale neural network models form the basis of many AI-based technologies such as neuromorphic chips, which are inspired by the human brain. Training these networks can be tedious, time-consuming, and energy-inefficient given that the model is often first trained on a computer and then transferred to the chip. This limits the application and efficiency of neuromorphic chips. ������ý researchers have solved this problem by developing a neuromorphic device capable of on-chip training and eliminates the need to transfer trained models to the chip. This could open a route towards efficient and dedicated AI chips in the future.

Have you ever thought about how wonderful your brain really is? It’s a powerful computing machine, but it’s also fast, dynamic, adaptable, and very energy efficient. You should feel quite lucky!

The combination of these attributes has inspired researchers at ������ý such as Yoeri van de Burgt to mimic how the brain works in technologies where learning is important such as artificial intelligence (AI) systems in transport, communication, and healthcare.

The neural link

“At the heart of such AI systems you’ll likely find a neural network,” says Van de Burgt – associate professor at the Department of Mechanical Engineering at ������ý.

Neural networks are brain-inspired computer software models. In the human brain, neurons talk to other neurons via synapses, and the more two neurons talk to each other, the stronger the connection between them becomes. In neural network models – which are made of nodes – the strength of a connection between any two nodes is given by a number called the weight.

“Neural networks can help solve complex problems with large amounts of data, but as the networks get larger, they bring increasing energy costs and hardware limitations,” says Van de Burgt. “But there is a promising hardware-based alternative – neuromorphic chips.”

The neuromorphic catch

Like neural networks, neuromorphic chips are inspired by how the brain works but the imitation is taken to a whole new level. In the brain, when the electrical charge in a neuron changes it can then fire and send electrical charges to connected neurons. Neuromorphic chips replicate this process.

“In a neuromorphic chip there are memristors (which is short for memory resistors). These are circuit devices that can ‘remember’ how much electrical charge has flowed through them in the past,’ says Van de Burgt. “And this is exactly what is required for a device modeled on how brain neurons store information and talk to each other.”

But there’s a neuromorphic catch – and it relates to the two ways that people use to train hardware based on neuromorphic chips. In the first way, the training is done on a computer and the weights from the network are mapped to the chip hardware. The alternative is to do the training in-situ or in the hardware, but current devices need to be programmed one by one and then error-checked. This is required because most memristors are stochastic and it’s impossible to update the device without checking it.

“These approaches are costly in terms of time, energy, and computing resources. To really exploit the energy-efficiency of neuromorphic chips, the training needs to be done directly on the neuromorphic chips,” says Van de Burgt.

The masterful proposal

And this is exactly what Van de Burgt and his collaborators at ������ý have achieved and published in a new paper in Science Advances. “This was a real team effort, and all initiated by co-first authors Tim Stevens and Eveline van Doremaele,” Van de Burgt says with pride.

The story of the research can be traced back to the master’s journey of Tim Stevens. “During my master’s research, I became interested in this topic. We have shown that it’s possible to carry out training on hardware only. There’s no need to transfer a trained model to the chip, and this could all lead to more efficient chips for AI applications,” says Stevens.

Van de Burgt, Stevens, and Van Doremaele – who defended her PhD thesis in 2023 on neuromorphic chips – needed a little help along the way with the design of the hardware. So, they turned to Marco Fattori from the Department of Electrical Engineering.

“My group helped with aspects related to circuit design of the chip,” says Fattori. “It was great to work on this multi-disciplinary project where those building the chips get to work with those working on software aspects.”

For Van de Burgt, the project also showed that great ideas can come from any rung on the academic ladder. “Tim saw the potential for using the properties of our devices to a much greater extent during his master’s research. There’s a lesson to be learnt here for all projects.”

The two-layer training

For the researchers, the main challenge was to integrate the key components needed for on-chip training on a single neuromorphic chip. “A major task to solve was the inclusion of the electrochemical random-access memory (EC-RAM) components for example,” says Van de Burgt. “These are the components that mimic the electrical charge storing and firing attributed to neurons in the brain.”

The researchers fabricated a two-layer neural network based on EC-RAM components made from organic materials and tested the hardware with an evolution of the widely used training algorithm backpropagation with gradient descent. “The conventional algorithm is frequently used to improve the accuracy of neural networks, but this is not compatible with our hardware, so we came up with our own version,” says Stevens.

What’s more, with AI in many fields quickly becoming an unsustainable drain of energy resources, the opportunity to train neural networks on hardware components for a fraction of the energy cost is a tempting possibility for many applications – ranging from ChatGPT to weather forecasting.

The future need

While the researchers have demonstrated that the new training approach works, the next logical step is to go bigger, bolder, and better.

“We have shown that this works for a small two-layer network,” says van de Burgt. “Next, we’d like to involve industry and other big research labs so that we can build much larger networks of hardware devices and test them with real-life data problems.”

This next step would allow the researchers to demonstrate that these systems are very efficient in training, as well as running useful neural networks and AI systems. “We’d like to apply this technology in several practical cases,” says Van de Burgt. “My dream is for such technologies to become the norm in AI applications in the future.”

Full paper details

“”, Eveline R. W. van Doremaele, Tim Stevens, Stijn Ringeling, Simone Spolaor, Marco Fattori, and Yoeri van de Burgt, Science Advances, (2024).

Eveline R. W. van Doremaele and Tim Stevens contributed equally to the research and are both considered as first authors of the paper.

Tim Stevens is currently working as a mechanical engineer at , a company co-founded by Marco Fattori.

Media contact

More on AI and Data Science

Latest news

![[Translate to English:] Foto: Bart van Overbeeke Bewerking: Grefo](https://assets.w3.tue.nl/w/fileadmin/_processed_/f/7/csm_hoofdbeeld_def_c49a59b323.jpg)